CUDA Ray Tracer

Ray tracing renderer written in CUDA and C++

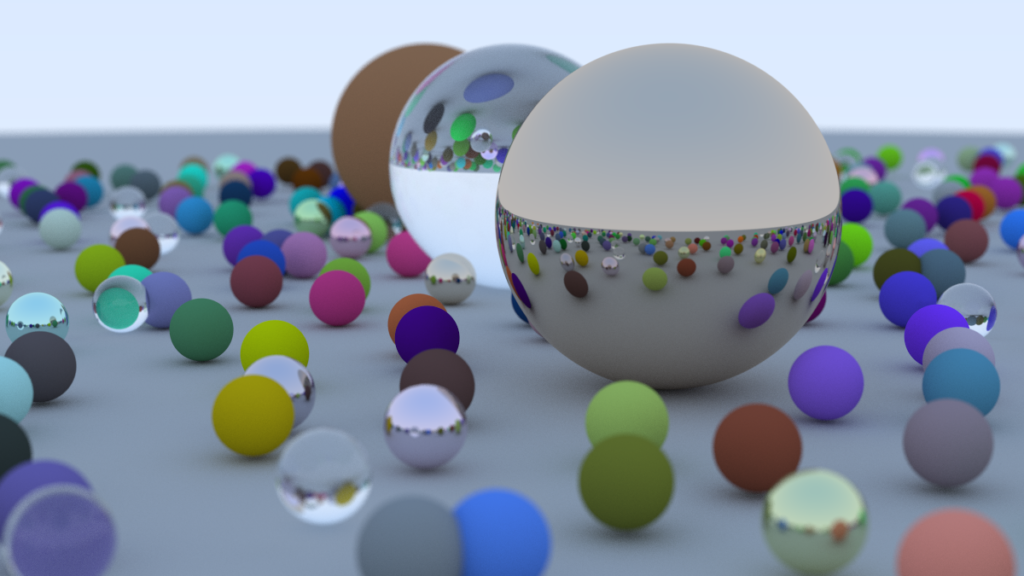

The final render using the same settings used for the tutorial cover image

Peter Shirley’s Ray Tracing in One Weekend tutorial was followed as a starting point for the CPU side and translated into CUDA to run on GPU with the help of the NVIDIA Technical Blog Accelerated Ray Tracing in One Weekend in CUDA by Roger Allen.

CUDA is a language developed by NVIDIA used to perform highly parallel mathematical calculations using graphics cards. The language is modeled after C++ and except for GPU specific primitives can compile using a normal C++ compiler to run on CPU or compile into PTX using nvcc to run on GPU. The majority of the ray tracer is written in a way that allows both compilation methods with stubs for the CPU and GPU that compile only with there respective compiler but call the shared functions. This makes it easy to enable both software and hardware rendering to aid debugging and compare performance. For ease of use on windows, I added the ability to save the rendered image as a PNG with the stb library. Download stb_image.h and stb_image_write.h and place them in an additional includes directory.

View the source code at: https://github.com/Andrew-Moody/CUDA-Ray-Tracer

If you are wondering why I chose to implement most of the code in headers, it is because CUDA’s support for link time optimizations is not (or was not) as good as typical C++ compilers. The majority of functions are small mathematical operations like vector math that wont get inlined when they otherwise would if implemented in a separate compilation unit. If the project grew significantly, trade offs can be made to improve maintainability.

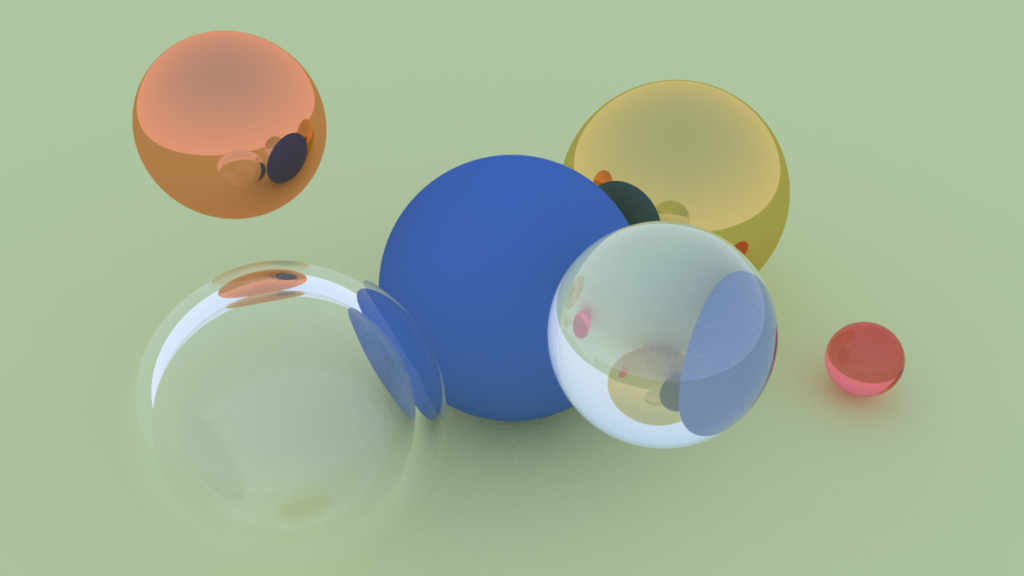

An example showing refraction in more detail. Left sphere is hollow glass

Performance Optimization

I was able to improve the render time for the cover image from 63 seconds to 33 seconds (less than 3 seconds for the second image) on a GTX 1060. Some of the more impactful steps taken where:

Reducing the amount of global memory that must be accessed to check a hit:

- Removing the need to check if a sphere is visible when checking for a hit either by sorting the sphere list by visibility or removing the option to generate non-visible spheres. (non-visible spheres was an option I added for generality but is not needed for this demo)

- Separating the center and (squared) radius into its own class to make the data more contiguous. Could go a step further and try to vector load a float4 but I am not sure about potential tradeoffs with register usage.

Increasing resident threads by reducing register usage:

- Switching random number generation from the stateful cuRAND library to the “stateless” PCG hash function reducing global memory access and register use.

- Pre normalizing camera rays to simplify hit checking.

- Using an index instead of a pointer when finding the closest sphere.

- Eliminating accidental use of double in place of float, especially for c math functions.

- Making use of launch bounds to cap register use when no further reduction could be found. Fortunately, this did not result in spillover.

- Enabling the -use_fast_math compilation option reduced registers in this case.

Recommendations for further improvement

Memory dependency is the main bottleneck at this point. The majority of computation time takes place in the hit detection code where every thread needs to read the same data and where this data is too large to fit a copy in shared memory. A potential approach might be to rearrange the kernel to use multiple threads per pixel and have threads stride over the collider list to perform hit checks. This might help scaling with more scene objects in a brute force way. Typically, applications involving collision detection would use a hierarchical data structure to efficiently check for hits in less than linear time. While outside the scope of original tutorial it is a topic covered by the series. It would be interesting to see how this approach could be adapted for a parallel environment. A potential drawback would be that access to various parts of the data structure would be random depending on the origin and direction of the rays making it difficult to ensure orderly access but there may be clever ways around this.